New Verse - AverageDriftAcrossVersions

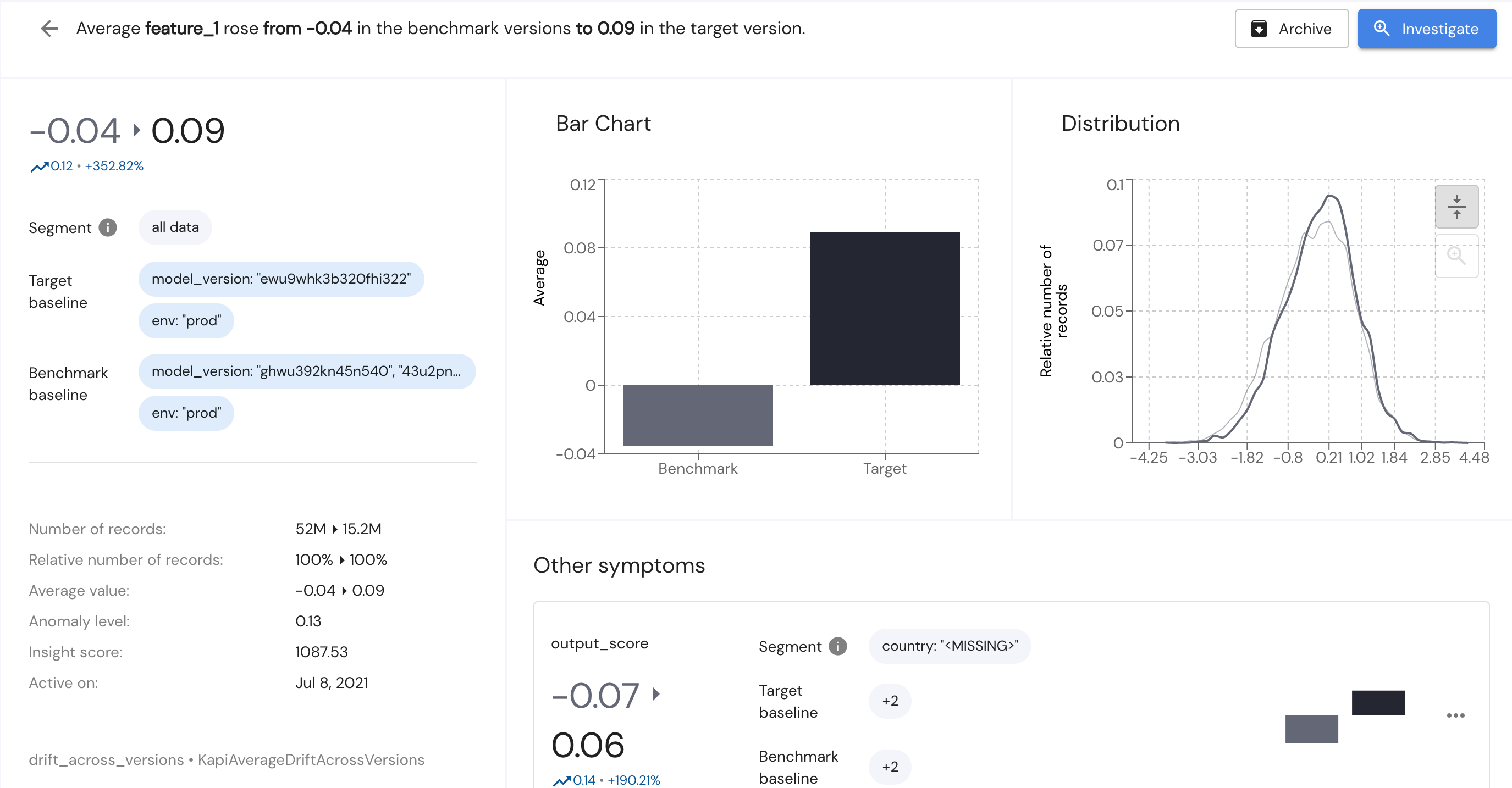

Hi everyone, we know some of you have been waiting for this for a while, so we are very excited to announce our new verse type: AverageDriftAcrossVersions.

With this new verse you can track drift across model versions and get alerted on specific segments of your data that underperform in newer versions.

The verse is easy to configure and does not require you to list specific version names.

Use the “version_field” param to define the field that holds the version. The “order_versions_by” param determines how versions are ordered, and therefore which one is the latest, while the “num_versions_benchmark” param sets how many previous versions the latest version is compared against.
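To make the ordering concrete, here is a minimal Python sketch of how the latest (target) version and its benchmark versions could be picked. It assumes that “LATEST_TO_RECEIVE_DATA” ranks versions by the most recent time each one received data; the `received_at` timestamp field and the `split_target_and_benchmark` helper are hypothetical and not part of the product.

```python
from datetime import datetime


def split_target_and_benchmark(rows, version_field, num_versions_benchmark,
                               time_field="received_at"):
    """Return (target_version, benchmark_versions).

    Assumption: LATEST_TO_RECEIVE_DATA ranks versions by the most recent
    timestamp at which each version received data (newest first).
    """
    last_seen = {}
    for row in rows:
        version, ts = row[version_field], row[time_field]
        if version not in last_seen or ts > last_seen[version]:
            last_seen[version] = ts
    if not last_seen:
        raise ValueError("no versions found")
    # Newest data first; the first entry is the target (latest) version.
    ordered = sorted(last_seen, key=last_seen.get, reverse=True)
    target = ordered[0]
    # The next num_versions_benchmark versions form the benchmark.
    benchmark = ordered[1:1 + num_versions_benchmark]
    return target, benchmark


# Hypothetical rows; "received_at" is an assumed timestamp field.
rows = [
    {"model_version": "v1", "received_at": datetime(2023, 1, 1)},
    {"model_version": "v2", "received_at": datetime(2023, 2, 1)},
    {"model_version": "v3", "received_at": datetime(2023, 3, 1)},
    {"model_version": "v4", "received_at": datetime(2023, 4, 1)},
    {"model_version": "v5", "received_at": datetime(2023, 5, 1)},
    {"model_version": "v2", "received_at": datetime(2023, 3, 15)},
]
target, benchmark = split_target_and_benchmark(rows, "model_version", 3)
print(target, benchmark)
```

Under this assumed ordering, v5 is the target and the benchmark is v4, v2 and v3: v2 ranks above v3 even though its name sorts lower, because it received data more recently.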

```json
{
  "drift_across_versions": {
    "baseline_segment": {
      "stage": [
        {
          "value": "test"
        }
      ]
    },
    "segment_by": [
      "country",
      "state"
    ],
    "metrics": [
      "feature_1",
      "feature_2",
      "feature_3",
      "feature_4",
      "output_score"
    ],
    "verses": [
      {
        "type": "AverageDriftAcrossVersions",
        "version_field": "model_version",
        "num_versions_benchmark": 3,
        "order_versions_by": "LATEST_TO_RECEIVE_DATA",
        "min_segment_size_fraction": 0.01,
        "min_anomaly_level": 0.1
      }
    ]
  }
}
```

A drift across versions is detected when the difference between the average of a tracked metric in the latest model version (target) and its average in the previous versions (benchmark), divided by the standard deviation of both data sets, is at least the “min_anomaly_level” you define.
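The per-segment check can be sketched in Python as follows. This is an illustration under stated assumptions, not the verse's actual implementation: “STD of both data sets” is read as the population standard deviation of the pooled target and benchmark values, a segment is flagged on the absolute score, “min_segment_size_fraction” is read as a segment's share of all compared rows, and the “baseline_segment” filter is ignored. The function names are hypothetical.

```python
import statistics
from collections import defaultdict


def drift_score(target_values, benchmark_values):
    """(mean(target) - mean(benchmark)) / std(target + benchmark).

    Assumption: the std is the population std of the pooled values.
    """
    pooled = list(target_values) + list(benchmark_values)
    std = statistics.pstdev(pooled)
    if std == 0:
        return 0.0  # identical values: no measurable drift
    return (statistics.fmean(target_values)
            - statistics.fmean(benchmark_values)) / std


def find_drifting_segments(rows, target, benchmark, version_field, segment_by,
                           metric, min_segment_size_fraction, min_anomaly_level):
    # segment key -> (target values, benchmark values)
    groups = defaultdict(lambda: ([], []))
    total = 0
    for row in rows:
        if row[version_field] == target:
            idx = 0
        elif row[version_field] in benchmark:
            idx = 1
        else:
            continue  # version not part of this comparison
        key = tuple(row[field] for field in segment_by)
        groups[key][idx].append(row[metric])
        total += 1
    flagged = {}
    for key, (t_vals, b_vals) in groups.items():
        # Assumption: segment size is its share of all compared rows.
        if (len(t_vals) + len(b_vals)) / total < min_segment_size_fraction:
            continue
        if not t_vals or not b_vals:
            continue  # need data from both sides to compare
        score = drift_score(t_vals, b_vals)
        if abs(score) >= min_anomaly_level:  # assumption: absolute score
            flagged[key] = score
    return flagged


# Hypothetical rows for one metric, segmented by country and state.
rows = [
    {"model_version": "v2", "country": "US", "state": "CA", "output_score": 0.9},
    {"model_version": "v2", "country": "US", "state": "CA", "output_score": 0.8},
    {"model_version": "v1", "country": "US", "state": "CA", "output_score": 0.5},
    {"model_version": "v1", "country": "US", "state": "CA", "output_score": 0.6},
    {"model_version": "v2", "country": "US", "state": "NY", "output_score": 0.7},
    {"model_version": "v1", "country": "US", "state": "NY", "output_score": 0.7},
]
flagged = find_drifting_segments(rows, "v2", ["v1"], "model_version",
                                 ["country", "state"], "output_score",
                                 min_segment_size_fraction=0.01,
                                 min_anomaly_level=0.1)
print(flagged)
```

Here only the US/CA segment is flagged: its target mean (0.85) sits about 1.9 pooled standard deviations above its benchmark mean (0.55), while US/NY shows no difference at all.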

This verse is useful not only for production versions but also for versions still being tested or running in shadow mode. Its insights show, segment by segment, where your latest model version is underperforming.

Further information about this verse can be found in our documentation.